|

|

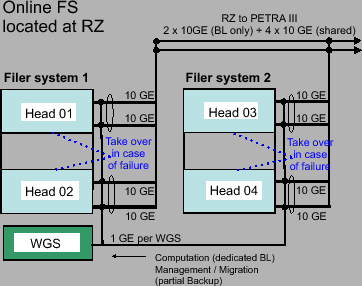

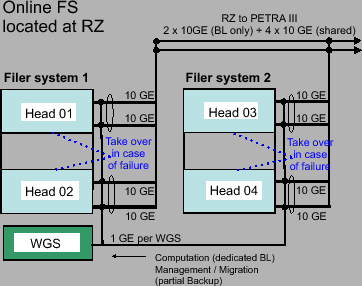

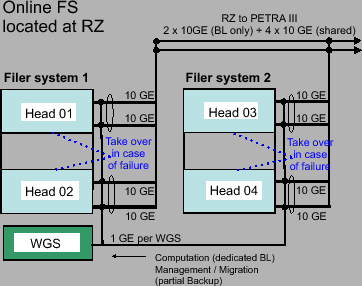

GE network interfaces (cumulative) that are managed by two processors, see figure 3.1. Each system is redundant and copes with hardware failures through a takeover option: if head 1 fails, head 2 does the work for all storage attached to the system [3.1]. Another safety measure is the 'snapshot' feature. It uses 20 % of the available disk space of a system and allows users, within given limits, to retrieve files that have been deleted accidentally.

Currently, the system creates snapshots (a 'data freeze') in a cascade: every four hours the system takes an 'hourly' snapshot, and once a day, at midnight, a 'daily' snapshot is made. Each Sunday a 'weekly' snapshot is taken, and the entire procedure repeats for up to five weeks. Thus, the status of the files is frozen every four hours and kept for a day. The daily status is taken at midnight and kept for seven days, and so on.
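The retention cascade can be made concrete with a small sketch that lists which snapshots would still exist at a given moment. It is illustrative only: the function name `available_snapshots`, the trigger times (four-hourly snapshots at 00:00, 04:00, ..., weekly snapshots at Sunday midnight), and the five-week retention of weekly snapshots are assumptions, not details of the actual fileserver configuration.

```python
from datetime import datetime, timedelta

# Retention windows follow the text; exact trigger times are assumptions.
HOURLY_STEP = timedelta(hours=4)
HOURLY_KEEP = timedelta(days=1)
DAILY_KEEP = timedelta(days=7)
WEEKLY_KEEP = timedelta(weeks=5)


def available_snapshots(now):
    """Return (kind, timestamp) pairs that would still exist at `now`."""
    snapshots = []
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)

    # Four-hourly snapshots: walk back from the latest 4 h boundary.
    t = midnight + HOURLY_STEP * ((now - midnight) // HOURLY_STEP)
    while now - t < HOURLY_KEEP:
        snapshots.append(("hourly", t))
        t -= HOURLY_STEP

    # Daily snapshots, taken at midnight and kept for seven days.
    t = midnight
    while now - t < DAILY_KEEP:
        snapshots.append(("daily", t))
        t -= timedelta(days=1)

    # Weekly snapshots, assumed at Sunday midnight (weekday() == 6).
    t = midnight - timedelta(days=(midnight.weekday() - 6) % 7)
    while now - t < WEEKLY_KEEP:
        snapshots.append(("weekly", t))
        t -= timedelta(weeks=1)

    return snapshots
```

Under these assumptions a user looking for a deleted file has at most six four-hourly, seven daily, and five weekly states to choose from at any time.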

The disk space itself is organized in aggregates and volumes. An aggregate is a group of disk drives that holds the volumes. A single volume represents the storage of a specific beamline, and thus the Photon Science PETRA III beamlines share storage and bandwidth. The Online FS volumes can be accessed from Linux and Windows and have standard Unix rights (user/group/others).
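As an illustration of the user/group/others scheme, the following sketch reads the permission bits of a file on a Linux client using only the Python standard library. The helper name `describe_rights` is hypothetical, and any path passed to it is a placeholder rather than an actual mount point of the Online FS.

```python
import os
import stat


def describe_rights(path):
    """Return the permission string of `path`, split by class."""
    mode = stat.S_IMODE(os.stat(path).st_mode)  # keep only the permission bits
    text = stat.filemode(mode)[1:]              # drop the file-type character
    return {"user": text[0:3], "group": text[3:6], "others": text[6:9]}
```

A file with mode `0o640`, for example, is readable and writable by its owner, readable by the group, and inaccessible to others.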

The Online Fileserver is set up in four aggregates [3.2], which provide the storage for the PETRA III beamlines. A single aggregate is created from multiple hard disk drives spanning different disk trays. The aggregates are created as RAID 6 arrays with spare drives.
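To see how RAID 6, spare drives, and the 20 % snapshot reserve reduce the raw capacity, consider the rough estimate below. It is a sketch under simplifying assumptions: the aggregate is treated as a single RAID 6 group (two disks' worth of parity), file system overhead is ignored, and the disk counts and sizes in the usage note are hypothetical, not the actual configuration.

```python
def usable_capacity_tb(n_disks, disk_tb, n_spares, snapshot_reserve=0.20):
    """Estimate the user-visible capacity of one RAID 6 aggregate in TB."""
    # RAID 6 spends the equivalent of two disks on parity; spares hold no data.
    data_disks = n_disks - n_spares - 2
    if data_disks < 1:
        raise ValueError("not enough disks for a RAID 6 array")
    raw_tb = data_disks * disk_tb
    # The snapshot feature reserves a fraction of the available space.
    return raw_tb * (1.0 - snapshot_reserve)
```

For a hypothetical aggregate of 24 disks of 2 TB each with 2 spares, `usable_capacity_tb(24, 2.0, 2)` gives roughly 32 TB, i.e. about two thirds of the 48 TB raw capacity.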

Tests on a single Fileserver head (10GE connect) resulted

When the system was bought, four beamlines claimed higher data rates. The volume foreseen for each of those so-called high-throughput beamlines was assigned to one of the four aggregates, each of which is managed by a file server head. The remaining volumes, belonging to beamlines with lower data rates, were assigned according to total aggregate size. The current assignment is given below.

|