The current situation and its issues¶

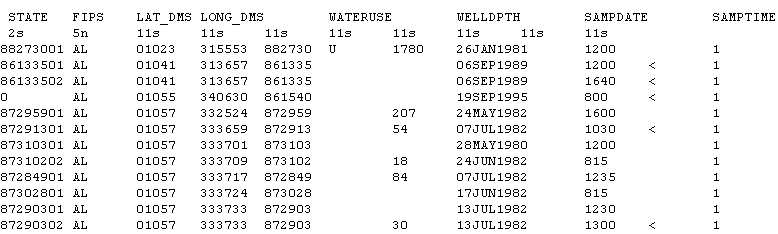

Traditionally data recorded on synchrotron radiation facilities is stored in ASCII files with the data organized in tabular form. Every file can store one or more tables and every row in a table represents a single point of the measurement the table belongs to. Additional meta-data, like the initial motor positions, is stored either in a file or table header, for instance as a set of key/value pairs.

Traditionally data has been stored in ASCII files at synchrotron. The data was typically organized in tabular form.

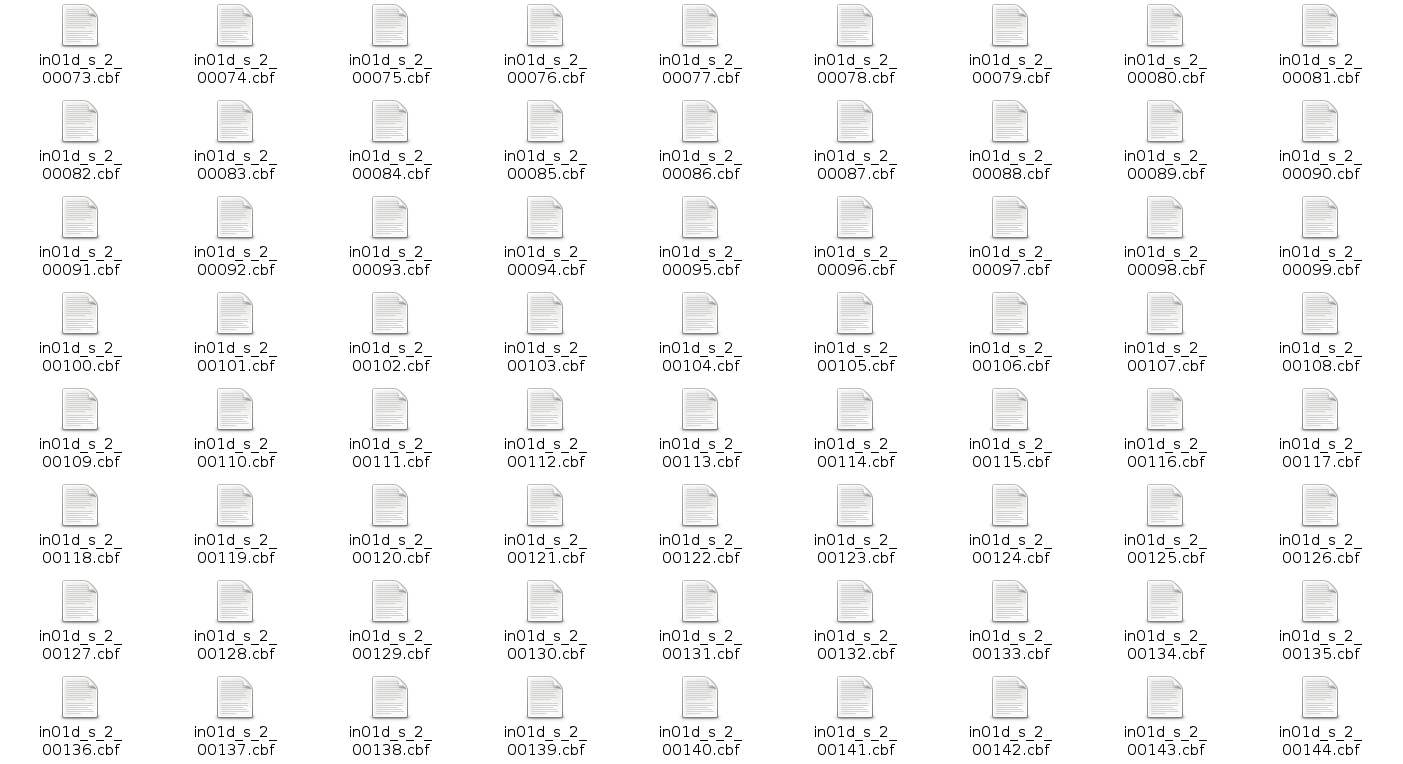

With the increasing application of 2D detectors to record data, ASCII files became problematic. Storing large 2D arrays in ASCII files produces a huge overhead and incredible large files which would reduce the IO performance severely. To overcome this problem binary file formats like CBF or EDF have been developed by the community or common image formats like TIF are used to store the data produced by 2D detectors. Common to all approaches is, however, that every file stores only a single image.

All binary formats used for 2D detectors where typically designed to store a single image per file. For long runs or experiments recording data at very high rates this leads to a large number of individual files.

Until now many experiments combine both approaches: using plain ASCII files to store meta-data (like motor positions) in tabular form while storing the data from 2D detectors in separate directories following the one file per image paradigm. This approach of storing data has lead to several complications in particular if the number of images (or more general the amount of data recorded) becomes large

- multiple files must be managed for a single experiment. For instance a single ASCII file with meta-data like motor positions, temperature, etc. and a large amount of individual image files typically stored in a separate directory. Another situation (even worser) would be if we write a small single ASCII file along with each image file to keep records of the meta-data associated with each image.

- during data analysis all the individual images must be read one after each other which causes a lot of rather expensive file handler operations.

- most file systems show a performance degeneration if the number of individual files within a directory exceeds a particular critical value.

- code to import the data into the analysis software must be written and, even more important, maintained for each individual file format. As some of these formats are not well defined, it is sometimes necessary to take care about the peculiarities of individual beamlines though they claim to use the same file format. In particular the maintenance efforts can become and issue as many academic employees only have a temporary working contract and thus leave after a short period of time to a new job leaving the code they’ve developed unmaintained (and in many cases undocumented).

Aside from this current problems new challenges lie ahead. In particular the amount of meta-data which should be stored during an experiment will increase dramatically due to two major developments

- data should be analyzed fully automatically, at best right after its acquisition. This implies that all the information an analysis tool-chain requires is available in the data file(s).

- data used for scientific publications should be cite-able and made available the reader of a particular paper.

With this old and new challenges in mind it is quite natural that the community is looking for new solutions how to store the data recorded during an experiment. During the last years the community came to an agreement (well sort of) that the NeXus data format using HDF5 as its physical file format shall replace most of the current ASCII and binary formats.

The next sections will provide an introduction into HDF5 and NeXus.